Introduction

ChatGPT has become a widely used tool for writing assistance, research support, coding help, and everyday problem-solving. As its user base grows, so do questions about how it works, what constraints shape its responses, and why performance can vary from time to time. Rather than viewing these issues as flaws, it’s useful to understand them as design and operational realities of a large-scale AI system.

This article takes an informative look at ChatGPT’s limits, speed concerns, and access models, helping readers form realistic expectations about how and why the system behaves the way it does.

Understanding Usage Limits and System Constraints

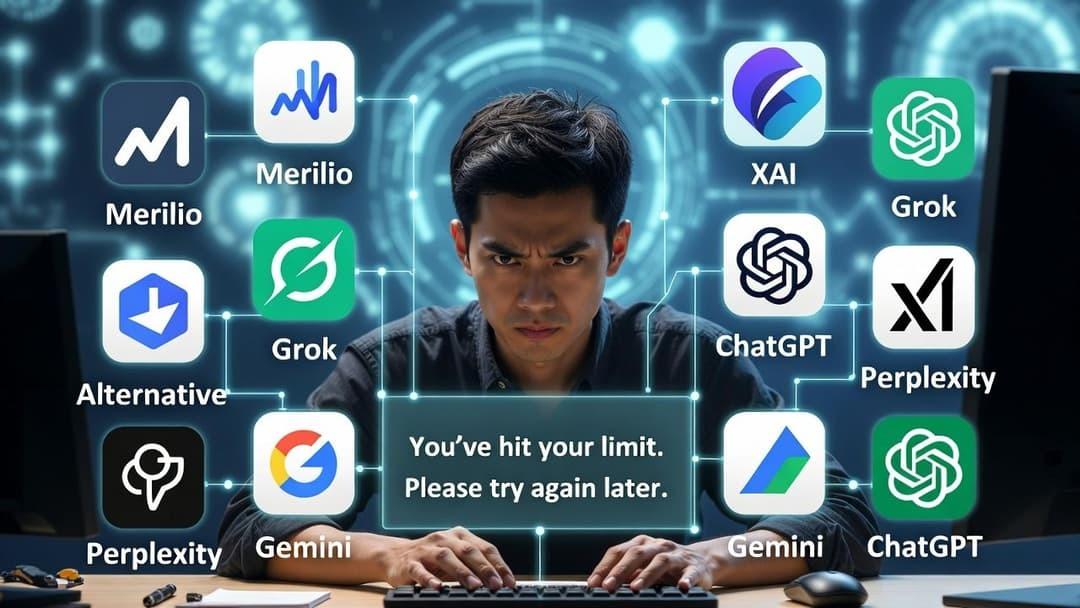

One of the most common questions users ask concerns ChatGPT’s usage limits: the practical boundaries placed on how much the service can be used. These limits can cap how many messages a user can send in a given period, how long a conversation can continue before earlier context is reduced, or how much computation is allocated to each request.

Such constraints exist to balance system stability and fairness. Because millions of users may be interacting with the service simultaneously, limits help prevent any single user or group from monopolizing resources. They also ensure predictable performance and help manage operational costs. Importantly, these limits are not fixed forever; they may change as infrastructure improves or as usage patterns evolve.
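One common way to enforce a per-user message cap is a sliding-window counter. The sketch below is purely illustrative: the class name, the limit of 3 messages, and the 60-second window are assumptions chosen for the example, not ChatGPT's actual mechanism or values.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_messages` per `window_seconds` for one user (hypothetical)."""

    def __init__(self, max_messages: int, window_seconds: float) -> None:
        self.max_messages = max_messages
        self.window_seconds = window_seconds
        self._sent = deque()  # timestamps of accepted messages, oldest first

    def allow(self, now: float) -> bool:
        # Forget messages that have aged out of the window.
        while self._sent and now - self._sent[0] >= self.window_seconds:
            self._sent.popleft()
        if len(self._sent) < self.max_messages:
            self._sent.append(now)
            return True
        return False


# Illustrative values only: 3 messages per 60 seconds.
limiter = SlidingWindowLimiter(max_messages=3, window_seconds=60.0)
decisions = [limiter.allow(t) for t in (0.0, 10.0, 20.0, 30.0, 61.0)]
print(decisions)  # [True, True, True, False, True]
```

The fourth message is refused because three were already accepted inside the window; the fifth goes through once the oldest one has aged out.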

Understanding that these caps are part of responsible system management can help users plan their interactions more effectively, such as by asking clearer questions or consolidating related requests.

How ChatGPT Processes Requests

To appreciate performance and limitations, it helps to know what happens behind the scenes. ChatGPT does not retrieve prewritten answers; instead, it generates responses token by token based on patterns learned during training. Each request involves several steps: interpreting the input, computing probabilities for the next token with a large model, and assembling a coherent output.
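Token-by-token generation can be pictured with a toy example. The probability table below is invented for illustration, and the loop uses greedy selection (always taking the most probable token) as a simplification; production systems compute probabilities with a neural network and typically sample from them rather than always taking the top choice.

```python
# Hypothetical next-token probabilities; a real model computes these
# with a neural network over a vocabulary of many thousands of tokens.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"model": 0.7, "answer": 0.3},
    "model": {"generates": 0.8, "<end>": 0.2},
    "generates": {"text": 0.9, "<end>": 0.1},
    "text": {"<end>": 1.0},
}


def generate(max_tokens: int = 10) -> list[str]:
    tokens = []
    current = "<start>"
    for _ in range(max_tokens):
        candidates = NEXT_TOKEN_PROBS[current]
        # Greedy decoding: pick the most probable next token.
        current = max(candidates, key=candidates.get)
        if current == "<end>":
            break
        tokens.append(current)
    return tokens


print(" ".join(generate()))  # the model generates text
```

Each loop iteration stands in for one full pass through the model, which is why longer outputs take proportionally longer to produce.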

This process requires significant computational power, especially for longer or more complex prompts. Additionally, the system must maintain conversational context, which adds to processing overhead. These technical realities mean that response time and depth are closely tied to both the complexity of the query and current system load.
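Maintaining context within a fixed budget usually means deciding which earlier turns to keep. A minimal sketch follows, assuming the simplest policy (keep the most recent messages that fit) and a crude word count in place of a real tokenizer. The function name and budget are hypothetical, and ChatGPT's actual strategy is not described in this article.

```python
def trim_history(messages: list[str], max_tokens: int) -> list[str]:
    kept = []
    used = 0
    # Walk from newest to oldest so recent turns are kept first.
    for message in reversed(messages):
        cost = len(message.split())  # crude stand-in for a tokenizer
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "hello there",
    "how do rate limits work",
    "they cap usage per period",
    "thanks",
]
print(trim_history(history, max_tokens=8))
# ['they cap usage per period', 'thanks']
```

Under this policy, the oldest turns are the first to disappear, which matches the common experience of a long conversation gradually losing track of its early details.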

Factors That Affect Response Speed

Users often wonder why ChatGPT is so slow at certain times. Several factors can contribute to slower responses, and most have little to do with the individual request.

High-traffic periods are a primary cause. When many users are active simultaneously, requests may be queued or throttled to keep the overall system stable. Network latency, server maintenance, or updates can also affect speed. On the user side, a slow internet connection or browser-related issues may add to perceived delays.
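The effect of traffic on waiting time can be seen in a very small queueing model. This is a sketch under strong assumptions: a single worker, first-come-first-served order, and a fixed 2-second service time, none of which reflect how the real service is provisioned.

```python
def queue_waits(arrivals: list[float], service_time: float) -> list[float]:
    """Time each request waits before processing starts (single FIFO worker)."""
    waits = []
    free_at = 0.0  # time at which the worker next becomes idle
    for arrival in arrivals:
        start = max(arrival, free_at)
        waits.append(start - arrival)
        free_at = start + service_time
    return waits


# Same three requests, spread out versus bunched together.
quiet = queue_waits([0.0, 5.0, 10.0], service_time=2.0)
busy = queue_waits([0.0, 0.5, 1.0], service_time=2.0)
print(quiet)  # [0.0, 0.0, 0.0]
print(busy)   # [0.0, 1.5, 3.0]
```

The requests themselves are identical in both runs; only their spacing changes, yet the bunched arrivals wait progressively longer.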

It’s also worth noting that more detailed or multi-part questions naturally take longer to process. A short factual query and a nuanced analytical prompt do not demand the same level of computation, so response times will vary accordingly.

Accuracy, Safety, and Content Moderation

Another factor shaping ChatGPT’s behavior is its emphasis on safety and accuracy. As part of handling a request, the system applies multiple checks to reduce the risk of harmful, misleading, or inappropriate content. These safeguards are essential, particularly given the broad range of topics users may raise.

While these measures improve reliability, they can also limit the type of information provided or result in more cautious phrasing. This is not a sign of uncertainty but a deliberate design choice to prioritize responsible use. Understanding this balance helps explain why some answers may appear conservative or general rather than speculative or definitive.

Access Models and Trial Availability

Questions about access often include interest in a ChatGPT free trial, which reflects a broader curiosity about how users can explore the system before committing to extended use. Trial or free-access models typically offer a subset of features or limited usage, allowing users to evaluate the tool’s capabilities.

These models are structured to introduce users to the technology while managing demand and operational costs. Feature differences between access tiers are usually tied to resource allocation rather than content quality. From an informational standpoint, understanding these access structures clarifies why some users experience different limits or response behaviors than others.

Setting Realistic Expectations for AI Tools

ChatGPT is a powerful language model, but it is not a human expert, a real-time database, or an infallible authority. Its outputs are shaped by training data, algorithms, and system constraints. Recognizing these factors helps users interpret responses appropriately and verify important information independently when needed.

By framing ChatGPT as a supportive tool rather than a definitive source, users can make better use of its strengths—such as summarization, idea generation, and explanation—while remaining aware of its boundaries.

Conclusion

Understanding how ChatGPT works, including its limits, performance variations, and access options, leads to more effective and informed use. Usage caps help maintain fairness, processing demands explain speed fluctuations, and access models balance availability with sustainability. None of these elements diminish the value of the tool; instead, they reflect the complexity of operating a large-scale AI system responsibly. With clear expectations and thoughtful interaction, users can continue to benefit from ChatGPT while navigating its practical realities.

Media Contact

Company Name: Merlio

Contact Person: Merlio Team

Address: 200 Rue de la Croix Nivert

City: 75015 Paris

Country: France

Website: https://merlio.app/